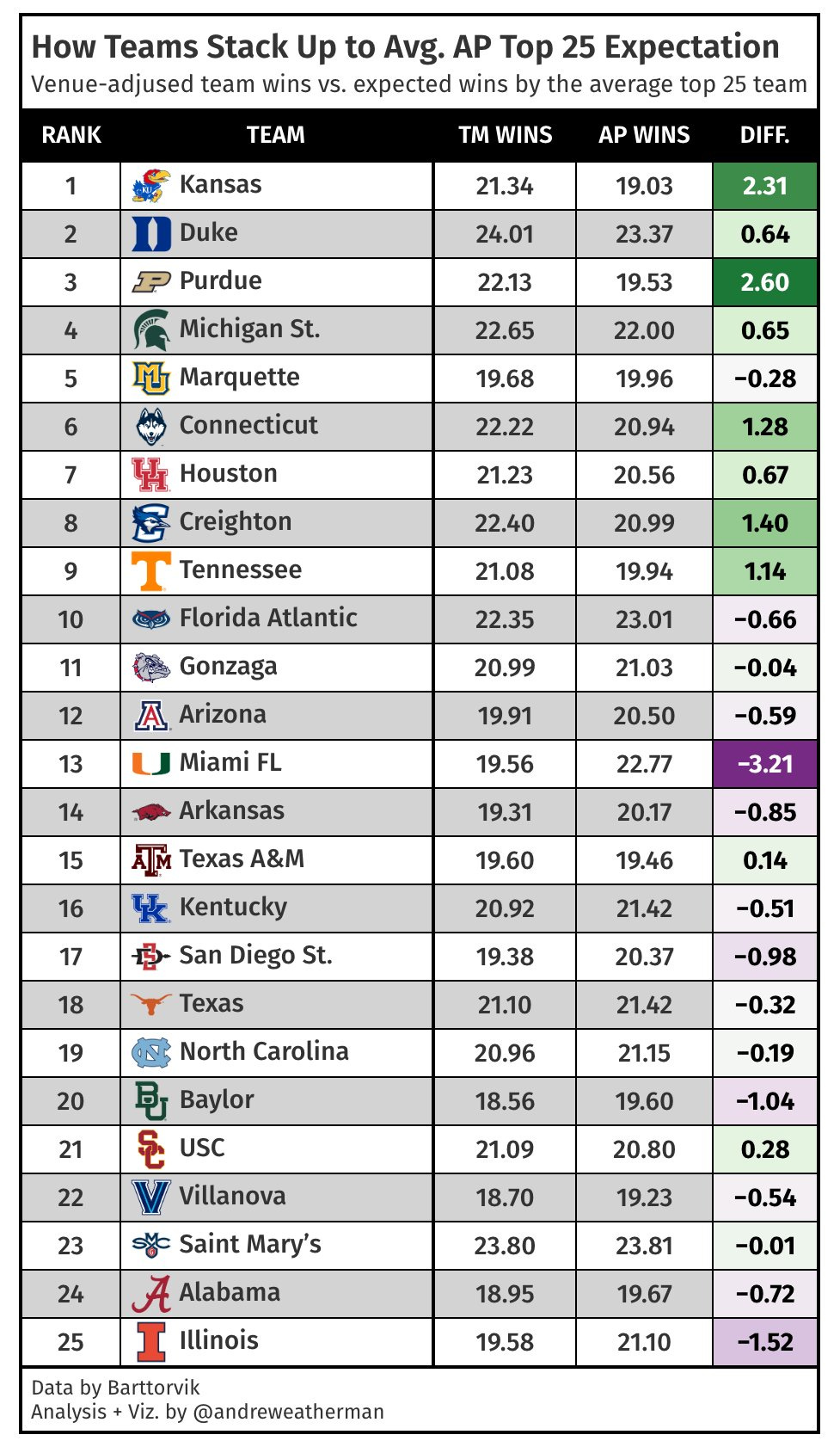

R Tutorial: Performance Against AP T-25 Expectation

Learning how to estimate performance against AP T-25 expectation in college basketball using R and open-source data

Welcome to the first installment of Buckets & Bytes! Today, we’ll be learning how to use open-source data to answer the question:

How many games would the average AP Top 25 team be expected to win against the schedule of each ranked team?

What does this mean?

We will use Barttorvik data (T-Rank; a leading college basketball metric) to compute venue-adjusted win totals for each AP Preseason Top 25 team and then compare to how the average ranked team would be expected to perform against the exact same schedule. We will walk through the process of how to visualize our results in the table below, all using R and open-source data!

This code will work throughout the season, so keep it handy if you want to create similar graphics as the season progresses!

How do I use Buckets & Bytes

Buckets & Bytes aims to provide a comprehensive code walkthrough, from start to finish, using engaging data. For some, the code discussion might not be necessary. For others, I hope you find some value in this tutorial. Our walkthrough is spread across three sections: Extraction, analysis, and visualization.

If you are just interested in the full source code, that can be found here and at the bottom of the article.

What do I need to know?

Each post in this series will assume different levels of R and analytics knowledge. Today’s code will use rvest, dplyr, purrr, and gt — but you should have a foundational understanding of R and the tidyverse. I will provide detailed explanations at times but might gloss over true beginner concepts.

Part 1: Data Extraction

You can run this code to install and load all necessary packages.

needed_packages <- c('tidyverse', 'rvest', 'gt', 'gtExtras', 'withr')

installed_packages <- needed_packages %in% rownames(installed.packages())

if (any(installed_packages == FALSE)) {

install.packages(packages[!installed_packages])

}

invisible(lapply(needed_packages, library, character.only = TRUE))Grabbing the season schedule

The first thing we must do is grab the season schedule. Luckily, Barttorvik has this easily accessible in .CSV form — so we can simply pass that url to the read_csv function. Importantly, the loaded CSV has no pre-defined column headers, so to ensure that R does not infer the first row to be the column headers, causing us to lose one game, we need to specify `col_names = FALSE` and manually set our own headers. Once we do that, let’s simplify some things and only choose the needed columns (neutral, home, and away).

Important: If you are on Windows, you will need to run this line before you try to scrape the website to avoid 403 errors. For some reason, his site blocks the User-Agent of Windows machines.

withr::local_options(HTTPUserAgent='Buckets & Bytes')

withr::local_options(HTTPUserAgent='Buckets & Bytes')

schedule <- read_csv("https://barttorvik.com/2024_master_sked.csv", col_names = FALSE) %>%

setNames(c('game_id', 'game_date', 'game_type', 'neutral', 'away', 'home')) %>%

select(neutral, home, away)Our `schedule` data should look like this. `neutral` is essentially a boolean column, with 1 indicating a neutral-site game.

Scraping preseason team ratings

Next up: Web scraping! Now that we have our season schedule, we need to have some reference point for team strength: For this visualization, we are going to stick with Barttorvik and use his T-Rank metric rating. Lucky for us, this metric is easily accessible on the home page!

Navigating to it, we can see that the data is nicely visualized inside an HTML table. If you are new to web scraping in R, a general rule of thumb is that if data is contained inside a static HTML table, the html_table() function in `rvest` should be able to retrieve it. Let’s check:

I have run into a few issues in the past scraping his website on Windows. If you run into connection errors, you can pull in the data using this code. You will then be able to run across() on it below. There’s nothing we can do about connection errors.

read_csv('https://gist.github.com/andreweatherman/f7c5e850a88f22b0577599fbc9d26da9/raw/928588a1afbddf1c49266cf3b56892be6682793f/bytes_ratings_connection_error.csv')

# withr::local_options(HTTPUserAgent='Buckets & Bytes')

read_html('https://barttorvik.com/trankpre.php') %>%

html_table()When we run that code, a tibble with all data is properly returned — awesome! What you might notice, however, is that our column headers are a bit inconvenient — e.g. `Proj. Rec`, `Ret Mins`, etc. We won’t be touching any of these columns, but out of habit, I always run the clean_names() function from the `janitor` package to quickly tidy headers. Again, that line isn’t necessary for our analysis — it just renames column headers with a more friendly syntax — but my code will assume you run it.

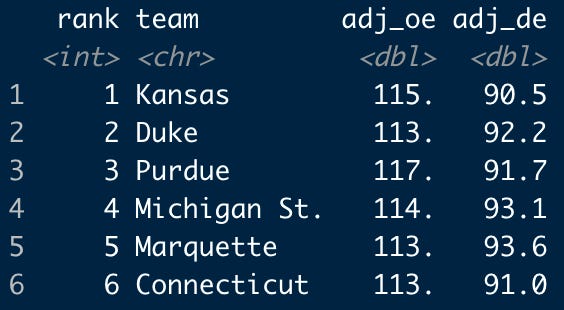

If you look closely at our data, you might notice that all columns are character types. Since we will be running quantitative analysis, we must covert a few columns to the numeric type. Specifically, we want `adj_oe` and `adj_de` to be numbers. (These represent the estimated points allowed/scored per 100 possessions, adjusted for team and opponent strength.)

We can use the `mutate` function from the `dplyr` package (part of the `tidyverse`) to essentially “modify” both columns with as.numeric(). Using mutate(), there are two ways to approach this problem. The first is to iterate over every column, one variable at a time; the second, and a more rigorous option, is to use across() to apply identical transformations to a range of columns at once. Looking back on my early days of self-teaching R, I wish that I had grasped the concept of across() sooner: The syntax might look strange at first, but trust me, it will save you loads of typing!

across() requires two arguments — the range of columns and the function(s) to apply. Diving deep into across() is out of scope for this blog entry, but let’s see how it works with our data. (`.x` is simply telling as.numeric() to run on the two referenced columns.)

ratings <- read_html('https://barttorvik.com/trankpre.php') %>%

html_table() %>%

pluck(1) %>%

janitor::clean_names() %>%

mutate(across(c(adj_oe, adj_de), ~ as.numeric(.x))) %>%

select(team, adj_oe, adj_de)

# if you run into connection errors, replace the code from read_html to clean_names with the ratings data in the link aboveA brief aside about the use of `pluck` above: html_table() returns a list. To access our tibble and perform any sort of analysis or visualization, we need to “retrieve” our data from the list (well, not really but only Sickos would reference an index position throughout this code). pluck() is a simple way to index within a list and “pluck” out the desired element. In this case, our data is just at index position 1.

A tibble for the preseason top 25 teams

Necessary for this analysis, of course, is data for the Associated Press’ ‘Preseason Top 25’ poll. Now, you could scrape this information from the poll website itself, but to be honest, it might be quicker to hard-encode the data yourself. (Importantly, we will need team names to match later on, so I decided to manually write the data.)

ap_top_25 <- tibble(

rank = 1:25,

team = c('Kansas', 'Duke', 'Purdue', 'Michigan St.', 'Marquette', 'Connecticut', 'Houston', 'Creighton', 'Tennessee',

'Florida Atlantic', 'Gonzaga', 'Arizona', 'Miami FL', 'Arkansas', 'Texas A&M', 'Kentucky', 'San Diego St.', 'Texas',

'North Carolina', 'Baylor', 'USC', 'Villanova', "Saint Mary's", 'Alabama', 'Illinois')

)Join on T-Rank ratings

Now that we have the preseason ranked teams, we can use our `ratings` table to “join” or “merge” with the `ap_top_25` data. Essentially, we want the `adj_oe` and `adj_de` of each ranked team. There are a few ways to approach this, and we are going to use the `left_join` function.

left_join() adds the columns from “y,” the second data frame, to “x,” the first data frame listed, based on matching keys. Importantly, left_join() ensures that all observations in “x” are kept, regardless of whether a match was found in “y.” For our use, it helps identify whether we misspelt a team in the manual tibble above.

At first, joins can be difficult to understand. Below is how we are structuring our code. We're combining the `ap_top_25` dataset with the `ratings` data, matching by team names. This means each team in the `ap_top_25` frame will now be supplemented with their `adj_oe` and `adj_de` values from the `ratings` table.

ap_top_25 <- left_join(ap_top_25, ratings, by = 'team')What have we done in Part 1?

Part 1 is all about data extraction with a splash of cleaning. Let’s break down what we’ve done.

We used read_csv() to access a .CSV file of the 2023-24 season schedule on the barttorvik website. We renamed and selected the necessary columns.

We used read_html() from the `rvest` package to scrape the ratings table on the barttorvik website. We introduced the clean_names() function from `janitor` and touched on how to use across() to apply the same function to a range of columns.

We manually encoded data for the preseason AP Top 25.

We used left_join() to join the ratings data with the AP Top 25 data.

Part 2: Data Analysis

That’s all the data we need! Now for the next part: Analysis! Remember that we are trying to calculate the difference in expected wins between each AP Top 25 team and the average AP Top 25 team.

Function Building

For our analysis, we are going to write a general function that adds all required information and then simply loop over it for each team. Writing functions is a good way to clean up your code and make your work reproducible.

As a note: When “team” is referenced in the explanations below, keep in mind that we are building a function. In other words, `team` is an argument that will be filled by whichever school we insert into the function. It could be Duke or Kansas or Purdue, etc.

Game location + opponent

parse_wp <- function(team) {

schedule %>%

filter(home == team | away == team) %>%

mutate(

# add game location

game_location = case_when(

home == team & neutral == 0 ~ 'home',

away == team & neutral == 0 ~ 'away',

.default = 'neutral'

),

team = team,

opponent = if_else(team == home, away, home),

avg_ap_adj_oe = mean(ap_top_25$adj_oe),

avg_ap_adj_de = mean(ap_top_25$adj_de))

# FUNCTION LOGIC WILL CONTINUE BELOW...

}Importantly, we want to adjust for venue, but our schedule data frame doesn’t have any explicit “game location” column, so we must write that logic ourselves. For this, we can use a `case_when` statement inside `mutate` — which you can think of as chaining together multiple if/else statements. The logic is simple: If the game is not at a neutral site (`neutral == 0`) and the team is listed under the `home` column, it is a home game. This is reversed for road trips. If neither condition is matched (i.e. `neutral == 1`), then case_when() will return the `.default` statement, which we have coded as ‘neutral’.

We will also create a column to indicate the opponent for each game using an `if/else` statement. Lastly, let’s go ahead and on the average AP Top 25 team’s offensive and defensive efficiency. We will use this in a later section.

Team and opponent ratings

Now let’s add the ratings that we previously scraped for both the team and their opponents. There are multiple ways to “merge” data in R, but to keep things consistent, we are again going to fall back on left_join(). Adding on team ratings is fairly straightforward.

For opponent ratings, however, we need to rename columns to specify that they indicate opponent ratings. We can quickly do this using select() with the form `new_column_name = old_column_name` (e.g. `opp_adj_oe = adj_oe`). If you’ve used SQL, you might notice that this runs parallel to SELECT old_name AS new_name.

Equality Joins

Before we can run this join, however, we need to reference the “keys” (or columns) to match on. If you look at the column names of `ratings` with `names(ratings)`, you see that we have `team`, `adj_oe`, and `adj_de`. If we do not specify the key to merge on, `left_join` will pick the only matching one — `team`. But these are opponent ratings, not the `team` rating, so we need to merge with the `opponent` column that we created in the last step.

Merging on keys with different names is called an “equality” join, and `dplyr` makes this very simple with the `join_by` function: just supply the column names and separate with `==`. If you want to learn more about joins, you can read more here!

You might notice that we could have just renamed the `team` column to `opponent` in the `select` step; in practice, this is an easier implementation, but I wanted to demonstrate a use of `join_by`.

parse_wp <- function(team) {

# ... PREVIOUS CODE ...

left_join(ratings %>% select(team, opp_adj_oe = adj_oe, opp_adj_de = adj_de), join_by('opponent' == 'team')) %>%

left_join(ap_top_25 %>% select(-rank), by = 'team')

# ... FUNCTION LOGIC WILL CONTINUE BELOW...

}Venue-adjust the ratings

Now that we have ratings and game location, let’s adjust efficiencies for each game. Barttorvik uses a 1.3% adjustment constant. For home games, offensive efficiency is multiplied by 1.013 and defensive efficiency is multiplied by 0.987. For away games, offensive efficiency is multiplied by 0.987 and defensive efficiency is multiplied by 1.013. For neutral site games, no adjustment is made. (Remember that defensive efficiency is points allowed per 100 possessions, so a lower value is better.)

Helper Function

To make our code cleaner, let’s write a “helper function” that we can apply to our data. There are ways to make this function shorter, but in effort to keep use consistent, we will stick with case_when().

adjust_efficiency <- function(df) {

adjusted <- df %>%

mutate(

# off. ratings for team and AP average (NOT opponent)

across(ends_with("oe") & !starts_with("opp"),

~ case_when(

game_location == "home" ~ . * 1.013,

game_location == "away" ~ . * 0.987,

.default = . # no change for neutral

)),

# def. ratings for team and AP average (NOT opponent)

across(ends_with("de") & !starts_with("opp"),

~ case_when(

game_location == "home" ~ . * 0.987,

game_location == "away" ~ . * 1.013,

.default = .

)),

# off. ratings for opponent (game location is switched!)

across(opp_adj_oe,

~ case_when(

game_location == "home" ~ . * 0.987,

game_location == "away" ~ . * 1.013,

.default = .

)),

# def. ratings for opponent (game location is switched!)

across(opp_adj_de,

~ case_when(

game_location == "home" ~ . * 1.013,

game_location == "away" ~ . * 0.987,

.default = .

))

)

return(adjusted)

}Let’s briefly explore the logic: Again, we are using across(), but we are introducing the ends_with() and starts_with() functions. They select columns based on some suffix or prefix. Because our columns follow a naming standard, we can use those functions here to avoid typing out all columns. For a quick refresh on our column names at this point in the (larger) function:

We are iterating over every column that ends with “oe,” which are our offensive efficiency columns, and those which end with “de,” our defensive efficiency columns. For example, this is the same thing typing `across(c(‘avg_opp_adj_oe’, ‘adj_oe’, ‘opp_adj_oe’))` but less typing!

Importantly, remember that our `game_location` column is in reference to the team, so a home game is an away one for the opponent and should be treated as such. Because of this, we need to not select the opponent columns — so we use `!starts_with(‘opp’)`, which removes all matching columns that start with ‘opp’.

To adjust opponent columns, we simply iterate over the opponent ratings columns using the same logic but switch our adjustment constant!

To apply the helper function, we just add it to our piping chain.

parse_wp <- function(team) {

# ... PREVIOUS CODE ...

adjust_efficiency()

# ... FUNCTION LOGIC WILL CONTINUE BELOW...

}Calculate per-game winning percentage

Pythag Metric

Now that we have our location-adjusted efficiencies, we are one step closer towards calculating win percentages. Barttorvik uses the Pythagorean expectation to estimate the win probability for a given game — a formula first created by Bill James for baseball. For our use, we need to calculate each team’s `pythag` with their location-adjusted efficiencies. The formula is:

We can just throw this into a mutate() step!

parse_wp <- function(team) {

# ... PREVIOUS CODE ...

mutate(team_pythag = (adj_oe^11.5) / (adj_oe^11.5 + adj_de^11.5),

avg_ap_pythag = (avg_ap_adj_oe^11.5) / (avg_ap_adj_oe^11.5 + avg_ap_adj_de^11.5),

opp_pythag = (opp_adj_oe^11.5) / (opp_adj_oe^11.5 + opp_adj_de^11.5))

# ... FUNCTION LOGIC WILL CONTINUE BELOW...

}Win probability

Now that we have ‘pythag’ values, we can calculate win probability for every game. The formula that Barttorvik uses is below and directly uses the values that we just appended.

Again, we can just throw this in mutate(). Importantly, we will only calculate win probabilities for each team and the average AP team; we do not care about opponent win probabilities. At this point, we have 17 columns in our data frame, but we only need three, so we will select the pertinent ones.

parse_wp <- function(team) {

# ... PREVIOUS CODE ...

team_wp = (team_pythag - team_pythag * opp_pythag) / (team_pythag + opp_pythag - 2 * team_pythag * opp_pythag),

ap_wp = (avg_ap_pythag - avg_ap_pythag * opp_pythag) / (avg_ap_pythag + opp_pythag - 2 * avg_ap_pythag * opp_pythag)) %>%

select(team, team_wp, ap_wp)

# ... FUNCTION LOGIC WILL CONTINUE BELOW...

}Our full function

Great — now our function is finished. Let’s put it all together:

parse_wp <- function(team) {

win_percentages <- schedule %>%

filter(home == team | away == team) %>%

mutate(

game_location = case_when(

home == team & neutral == 0 ~ 'home',

away == team & neutral == 0 ~ 'away',

.default = 'neutral'

),

team = team,

opponent = if_else(team == home, away, home),

avg_ap_adj_oe = mean(ap_top_25$adj_oe),

avg_ap_adj_de = mean(ap_top_25$adj_de)) %>%

left_join(ratings %>% select(team, opp_adj_oe = adj_oe, opp_adj_de = adj_de), join_by('opponent' == 'team')) %>%

left_join(ap_top_25 %>% select(-rank), by = 'team') %>%

adjust_efficiency() %>%

mutate(

team_pythag = (adj_oe^11.5) / (adj_oe^11.5 + adj_de^11.5),

avg_ap_pythag = (avg_ap_adj_oe^11.5) / (avg_ap_adj_oe^11.5 + avg_ap_adj_de^11.5),

opp_pythag = (opp_adj_oe^11.5) / (opp_adj_oe^11.5 + opp_adj_de^11.5),

team_wp = (team_pythag - team_pythag * opp_pythag) / (team_pythag + opp_pythag - 2 * team_pythag * opp_pythag),

ap_wp = (avg_ap_pythag - avg_ap_pythag * opp_pythag) / (avg_ap_pythag + opp_pythag - 2 * avg_ap_pythag * opp_pythag)

) %>%

select(team, team_wp, ap_wp)

return(win_percentages)

}If you’ve followed along to this point and have questions about what specific things do in the function, please reach out to me on Twitter! This is the first post in the series, so I am still figuring out what might be “too much” — or not enough! — explanation.

Loop over the function

Now that we have our function, we can “loop over it” to get data for each ranked team. For full transparency, I hate for-loops. I think they are unintuitive, clunky, and need precise writing to ensure optimization. (They aren’t necessarily bad to use, though!) Lucky for us, we can lean on the `map` family of functions in `purrr`. These mapping functions essentially apply a function(s) to each element in a vector or list. If you want to learn more about `purrr` — and I highly recommend that you do — check out this great introduction by package maintainer, and R GOAT, Hadley Wickham.

For our use, we are going to use map_dfr(). This function will iterate over the input vector (in this case, the teams in our `ap_top_25` data frame) and bind the rows of the result. In other words, it will output a single tibble with all data!

game_preds <- map_dfr(ap_top_25$team, \(team) parse_wp(team))Let’s walk through our code. map_dfr() takes a single input (map2_dfr will take two and pmap_dfr will take unlimited as a list). Our first argument is our input, which we will feed into our second argument (our function). Remember that we want to iterate over all preseason AP top 25 teams, and those are listed in the `team` column in `ap_top_25` frame — so we will pull those out using `ap_top_25$team`.

Let’s explore that “weird” syntax in the second argument. `\(team)` is essentially saying, “We want to reference our input vector as `team`.” We could have put basically any other thing here as our reference name — “school,” “program,” “university,” etc. All this is doing is telling our function what to expect our input vector to be referred to as in the function. After that, we simply call our `parse_wp` function and pass our input vector — named `team` — to the function and run it!

Summarize the results

Now that we have our game predictions, we can quickly add up the expected wins using summarize(). Expected wins can simply be calculated as the summation of win percentage. First, though, we need to remove all games with no win percentage (these are games versus non-D1 teams).

game_preds_summarized <- game_preds %>%

filter(!is.na(team_wp)) %>%

summarize(

team_wins = sum(team_wp),

ap_wins = sum(ap_wp),

diff = team_wins - ap_wins,

.by = team

)The `.by` argument is another way to “group” our summarization without writing a group_by() step. We want to group by each team to return the expected team wins and AP wins by team schedule. This will apply the set of calculations to each team instead of every row at once.

Add team logos and rank

To make our table easier to read, let’s add team logos to plot. You can grab a .CSV of team logos from my GitHub. Again, we are using an equality join between `team` and `common_team`. We are also adding a column to indicate AP preseason rank. We know this will simply be the row number of each team as they are already in order. `.before` is telling `mutate` where we want the rank column to appear.

Lastly, we will create a column that includes the HTML we need to correctly format the team logos and names column. The idea here is to place the team names to the right of their respective logo in a single column, with the text aligned in the middle relative to the logo. If you aren’t familiar with HTML, that’s completely okay. This step isn’t necessary for understanding how to make the visualization.

teams <- read_csv('https://gist.github.com/andreweatherman/cd2a258b7a75dc75cd86940e29f28af9/raw/12a2cd5f919a681ab4711c4b5082385bacd78c2e/teams.csv') %>%

select(common_team, logo)

game_preds_summarized <- game_preds_summarized %>%

left_join(teams, join_by('team' == 'common_team')) %>%

mutate(ap_rank = row_number(), .before = team) %>%

mutate(team_logo = glue::glue("<img src='{logo}' style='height: 25px; width: auto; vertical-align: middle;'> {team}"))What have we done in Part 2?

Part 2 is all about analyzing our data. Let’s break down what we’ve done.

Used case_when() to identify game location relative to `team`.

Introduced equality joins to merge on rating data.

join_by(“first_column” == “second_column”)

Defined a helper function using case_when() and selection helpers — starts_with() and ends_with() — to compute venue-adjusted ratings.

Applied pre-defined Pythag and win probability formulas.

Used map_dfr() to loop over our parse_wp() function and return results for all teams in a single tibble.

Summarized the resulting table per-team with the `.by` argument in summarize().

Brought in team logos for plotting using an equality join.

Part 3: Data Visualizing

Now for the fun part: Visualizing our data! For this visualization, we will be using the `gt` and `gtExtras` packages to create a table. For this section, I will break up the code in chunks, explaining each step, and include the final table code at the bottom.

Step 1: Basic table construction

game_preds_summarized %>%

gt(id='ap') %>%

gt_theme_excel() %>%

fmt_markdown(team_logo) %>%

cols_hide(c(team, logo)) %>%

cols_move(team_logo, after = ap_rank) %>%

cols_align(columns = c(team_wins, ap_wins, diff, ap_rank), align = 'center') %>%

cols_align(columns = team_logo, align = 'left') %>%

cols_label(

ap_rank = 'Rank',

team_logo = 'Team',

team_wins = 'TM Wins',

ap_wins = 'AP Wins',

diff = 'Diff.')A `gt` table can be initialized by passing data to gt(). Because we will be adding custom CSS at the end, we also need to pass an arbitrary table id to gt(). We will add on the appearance of our table later, but we can start with a base theme now, which will be gt_theme_excel() from the `gtExtras` package.

fmt_markdown() renders our HTML column from earlier. cols_hide(), cols_move(), and cols_align() simply hide, move, and align our columns. cols_label() renames our column headers in the form of `old_name` = “New Name”.

Step 2: Format numbers

game_preds_summarized %>%

# ... PREVIOUS CODE ...

fmt_number(columns = c(team_wins, ap_wins, diff), decimals = 2) %>%

gt_hulk_col_numeric(diff)fmt_number() will do a few things, but we are employing it to trim our columns to show only two decimals places. gt_hulk_col_numeric() uses a diverging purple-green color palette — one that is colorblind safe! — to fill the `diff` cell background based on its value relative to the range in the column.

Right now, our table looks like this: Pretty solid but we can still tweak a few things!

Step 3: Title, subtitle, and caption

game_preds_summarized %>%

# ... PREVIOUS CODE ...

tab_header(title = md('How Teams Stack Up to Avg. AP Top 25 Expectation'), subtitle = md('Venue-adjused team wins vs. expected wins by the average top 25 team')) %>%

tab_source_note(source_note = md('Data by Barttorvik<br>Analysis + Viz. by @andreweatherman'))tab_header() and tab_source_note() will allow us to add a title, subtitle, and caption (source note). Wrapping our text in md() lets us use Markdown-formatted text, which can be useful if we want to style text or insert purposeful line breaks (like we did in our source note).

Step 4: Table styles and options

game_preds_summarized %>%

# ... PREVIOUS CODE ...

# bold diff col. text

tab_style(

style = cell_text(weight = 'bold'),

location = cells_body(columns = diff)

) %>%

# control padding on header

tab_options(

heading.padding = 3,

table.font.size = 15

) %>%

# title font

tab_style(

locations = cells_title('title'),

style = cell_text(

font = google_font('Fira Sans'),

weight = 600,

size = px(19)

)

) %>%

# subtitle font

tab_style(

locations = cells_title('subtitle'),

style = cell_text(

font = google_font('Fira Sans'),

weight = 400,

size = px(14)

)

) %>%

# source note font

tab_style(

locations = cells_source_notes(),

style = cell_text(

font = google_font('Fira Sans'),

weight = 400,

size = px(12)

)

) %>%

# columns labels

tab_style(

locations = cells_column_labels(columns = everything()),

style = cell_text(

font = google_font('Fira Sans'),

weight = 600,

size = px(14),

transform = 'uppercase',

align = 'center'

)

) %>%

# table font

opt_table_font(

font = google_font('Fira Sans'),

weight = 450

)This might seem like a lot of code — but most of it is just repeating styles for different sections!

The first tab_style() is bolding the text in the `diff` column. We can refer to a particular column’s values by referencing it in `cells_body`. The second tab_style() adjusts the padding and table font size.

The next three tab_style() calls specifies the font family, weight, and size of text in our title, subtitle, and source note. The google_font() function allows us to easily use a font from Google Fonts without having to download it. Feel free to play around with different fonts; I am using Fira Sans for this visualization.

We next modify the column labels’ font, weight, size, alignment and force them to be capitalized. We indicate that we want to change all column headers by using everything() inside cells_column_labels(). Finally, we set the table font to Fira Sans.

Step 5: Custom CSS

game_preds_summarized %>%

# ... PREVIOUS CODE ...

opt_css(

css = "

#ap .gt_heading {

padding-bottom: 0px;

padding-top: 6px

}

#ap .gt_subtitle {

padding-top: 2px;

padding-bottom: 6px;

}

"

) To put finishing touches on our table, we add brief CSS. All this does is adjust the padding on the header and subtitle: The padding was bugging me. Importantly, this only works because we set a table id at the start of our code — which we now reference here (#ap …).

Full table code and saving the table

All together, here is our final table code! We can place gtsave_extra() at the end of our code to save our table!

game_preds_summarized %>%

gt(id='ap') %>%

gt_theme_excel() %>%

fmt_markdown(team_logo) %>%

cols_hide(c(team, logo)) %>%

cols_move(team_logo, after = ap_rank) %>%

cols_align(columns = c(team_wins, ap_wins, diff, ap_rank), align = 'center') %>%

cols_align(columns = team_logo, align = 'left') %>%

cols_label(

ap_rank = 'Rank',

team_logo = 'Team',

team_wins = 'TM Wins',

ap_wins = 'AP Wins',

diff = 'Diff.') %>%

fmt_number(columns = c(team_wins, ap_wins, diff), decimals = 2) %>%

gt_hulk_col_numeric(diff) %>%

tab_header(title = md('How Teams Stack Up to Avg. AP Top 25 Expectation'), subtitle = md('Venue-adjused team wins vs. expected wins by the average top 25 team')) %>%

tab_source_note(source_note = md('Data by Barttorvik<br>Analysis + Viz. by @andreweatherman')) %>%

tab_style(style = cell_text(weight = 'bold'), location = cells_body(columns = diff)) %>%

tab_options(heading.padding = 3, table.font.size = 15) %>%

tab_style(

locations = cells_title('title'),

style = cell_text(

font = google_font('Fira Sans'),

weight = 600,

size = px(19)

)

) %>%

tab_style(

locations = cells_title('subtitle'),

style = cell_text(

font = google_font('Fira Sans'),

weight = 400,

size = px(14)

)

) %>%

tab_style(

locations = cells_source_notes(),

style = cell_text(

font = google_font('Fira Sans'),

weight = 400,

size = px(12)

)

) %>%

tab_style(

locations = cells_column_labels(columns = everything()),

style = cell_text(

font = google_font('Fira Sans'),

weight = 600,

size = px(14),

transform = 'uppercase',

align = 'center'

)

) %>%

opt_table_font(

font = google_font('Fira Sans'),

weight = 450

) %>%

opt_css(

css = "

#ap .gt_heading {

padding-bottom: 0px;

padding-top: 6px

}

#ap .gt_subtitle {

padding-top: 2px;

padding-bottom: 6px;

}

"

) %>%

gtsave_extra('ap_25_expected.png')What have we done in Part 3?

Part 3 is all about visualizing our data. Let’s break down what we’ve done.

Initialized our table using gt() and piping in the summarized data.

Applied a basic table theme using gt_theme_excel().

Rendered in our HTML content using fmt_markdown().

Adjusted our columns using cols_hide(), cols_move(), and cols_align() and renamed column headers with cols_label().

Truncated numeric columns using fmt_number() and applied a colorblind-safe conditional fill with gt_hulk_col_numeric().

Added a table title, subtitle, and caption with tab_header() and tab_source_note().

Modified the font, weight, and size of our column headers, table text, and titles with tab_style().

Applied custom CSS using opt_css() to adjust padding.

Saved our table using gtsave_extra().

Full Source Code

The full source code is hosted here on GitHub.

If you found value in today’s post, I kindly ask that you please subscribe to Buckets & Bytes and consider sharing the blog with others. Subscribing is entirely free! It just ensures that you never miss a post. Sharing is never expected but always appreciated. It helps me understand what content is most valuable.